Novel View Synthesis from Single Images with Tiny Latent Diffusion Models

Ethan Chun

Available as a Colab notebook here.

Abstract

- Context: View-conditioned diffusion models show great potential for improving scene understanding in robotics applications.

- Problem: However, training times are slow, and the goals of current diffusion models are misaligned with those of robotics: while the former focus on image quality, robotics applications prioritize efficiency.

- Solution: We present a scaled-down version of the popular Latent Diffusion Model, trained on the SRNCars dataset. We add view conditioning to enable novel view synthesis from single images and demonstrate reasonable image outputs on a dataset containing a single object class. Our model reduces the number of trainable parameters from 860 million in state-of-the-art models to fewer than 1.7 million and trains in under four hours on a consumer GPU.
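The view conditioning mentioned above can be illustrated with a minimal sketch. This page does not say how the conditioning transform is encoded, so the sketch assumes it is the relative camera pose between the source and target views, flattened into a 12-value vector. The helper names `yaw_pose` and `relative_pose_condition`, the 4x4 camera-to-world convention, and the circular camera path are all hypothetical.

```python
import numpy as np


def yaw_pose(theta: float, radius: float = 1.0) -> np.ndarray:
    """Hypothetical 4x4 camera-to-world pose on a circle around the origin.

    Rotates by `theta` radians about the world y-axis, then places the camera
    at distance `radius` along its rotated +z axis.
    """
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])
    pose = np.eye(4)
    pose[:3, :3] = rot
    pose[:3, 3] = rot @ np.array([0.0, 0.0, radius])
    return pose


def relative_pose_condition(src_c2w: np.ndarray, tgt_c2w: np.ndarray) -> np.ndarray:
    """Target camera expressed in the source camera frame, as a flat vector."""
    rot_src, t_src = src_c2w[:3, :3], src_c2w[:3, 3]
    # Closed-form inverse of a rigid transform: [R | t]^-1 = [R^T | -R^T t]
    src_w2c = np.eye(4)
    src_w2c[:3, :3] = rot_src.T
    src_w2c[:3, 3] = -rot_src.T @ t_src
    rel = src_w2c @ tgt_c2w
    # 12 values: the 3x4 [R | t] block, flattened row-major
    return rel[:3, :].reshape(-1)


# Hypothetical conditioning for "move the camera 90 degrees around the car"
cond = relative_pose_condition(yaw_pose(0.0), yaw_pose(np.pi / 2))
```

Because the vector depends only on the change in viewpoint, two camera pairs separated by the same rotation yield the same conditioning, which is one plausible reason to prefer relative over absolute poses in a sketch like this.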

Summary

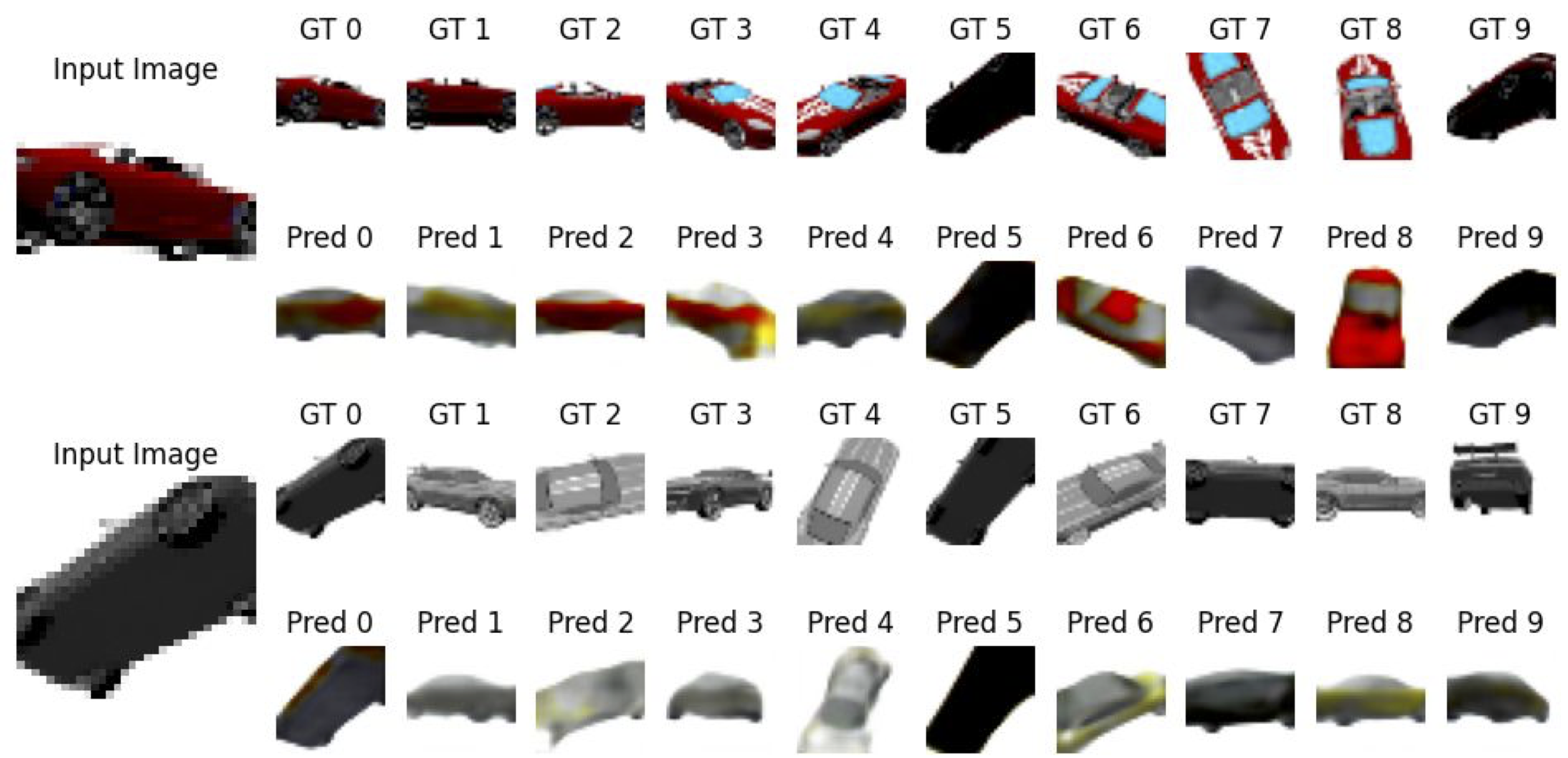

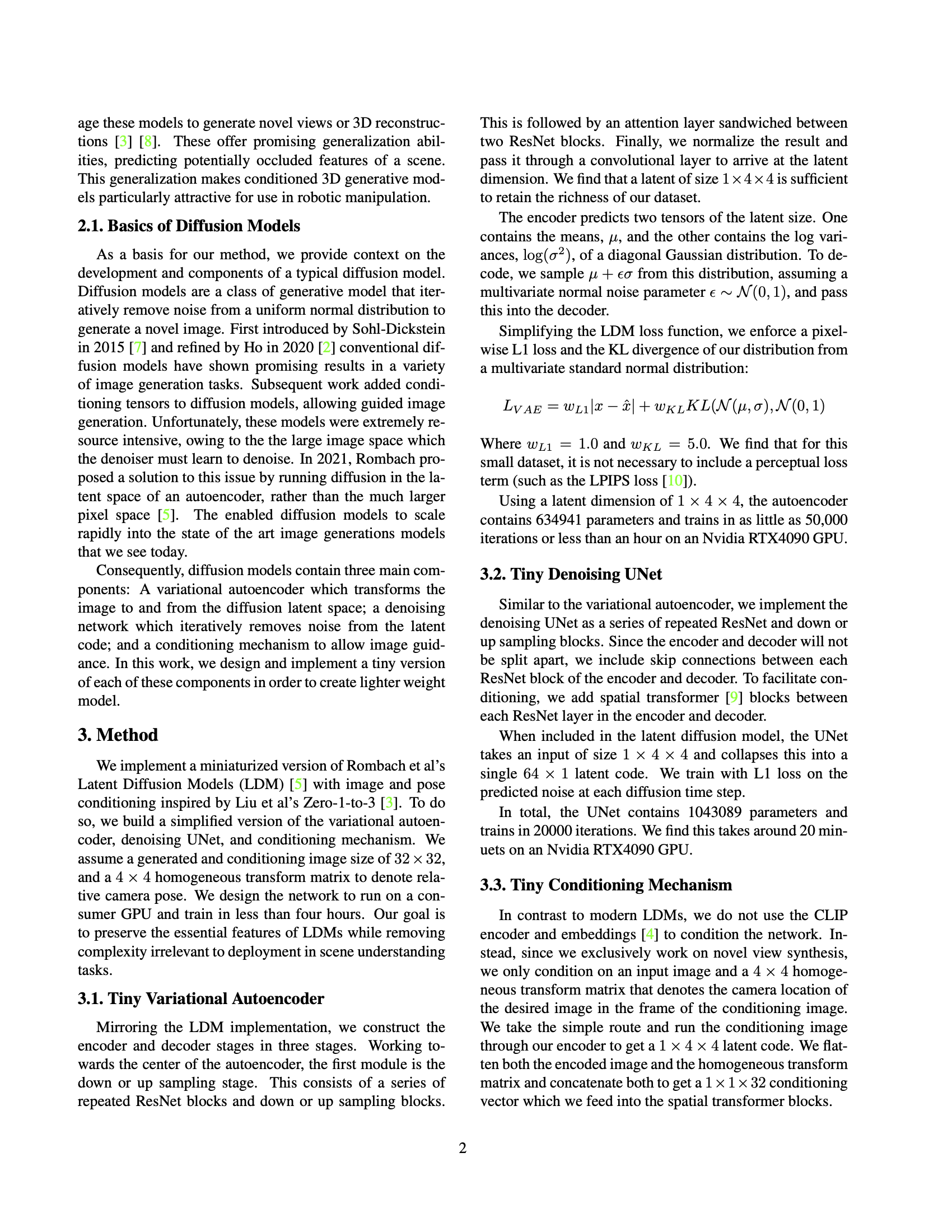

Results

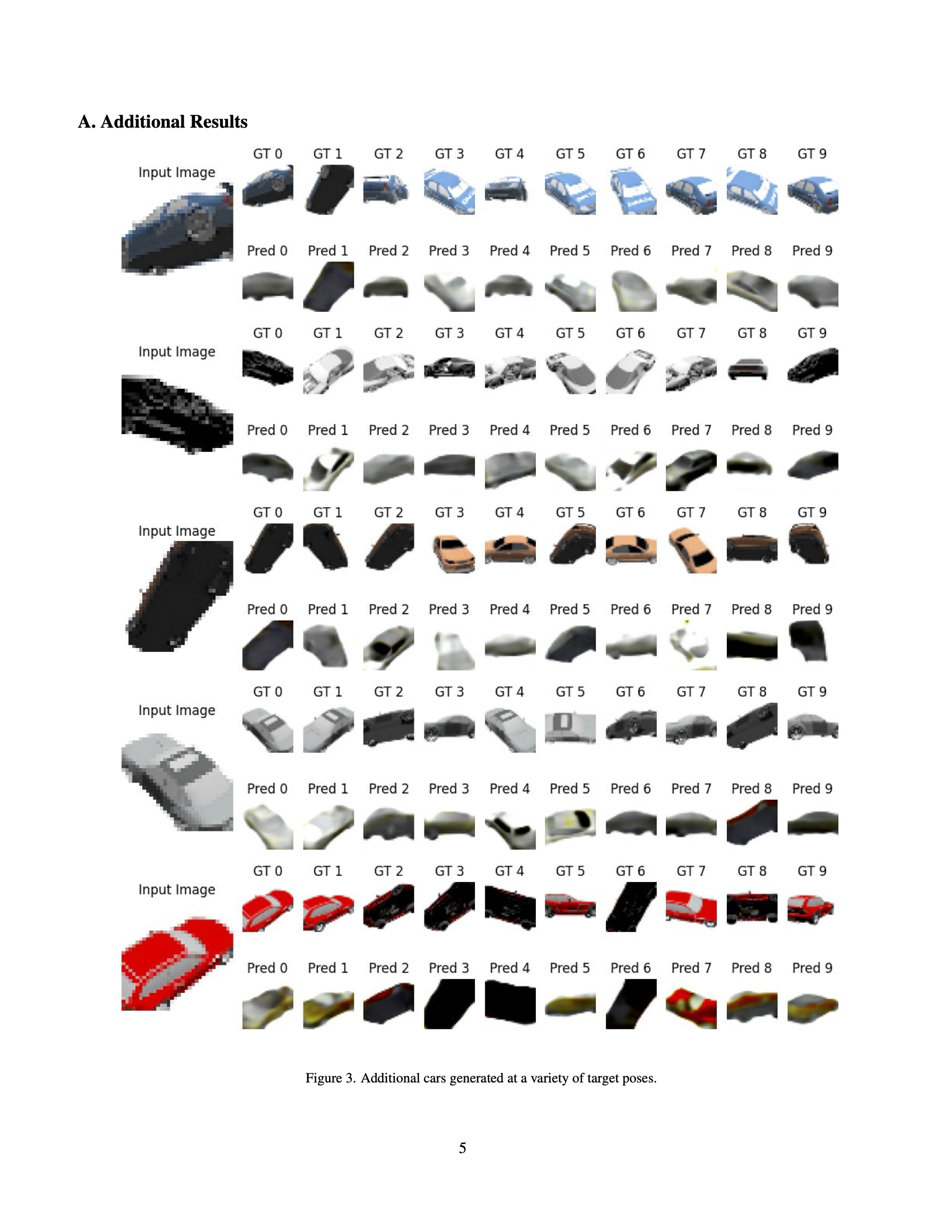

Given a single input image (left) and a conditioning transform (not shown), our tiny latent diffusion model generates novel views (pred) of unseen geometry. Note that even when the model has no information about the top or back of a car, it still generates plausible predictions that resemble the unseen ground-truth images.
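To make the inference setup in the figure concrete, here is a hedged sketch of how a target-view latent might be sampled from noise given the source-image latent and a pose vector. The page does not state which sampler the model uses; this swaps in deterministic DDIM sampling (eta = 0) over a linear DDPM noise schedule. The denoiser signature, schedule values, latent shape, and function names are assumptions for illustration only.

```python
from typing import Callable

import numpy as np

# Hypothetical denoiser: (noisy_latent, timestep, source_latent, pose_vec) -> predicted noise
Denoiser = Callable[[np.ndarray, int, np.ndarray, np.ndarray], np.ndarray]


def linear_alpha_bars(num_steps: int = 1000, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> np.ndarray:
    """Cumulative products of (1 - beta_t) for a linear DDPM noise schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def ddim_sample(denoiser: Denoiser, source_latent: np.ndarray, pose_vec: np.ndarray,
                alpha_bars: np.ndarray, num_sample_steps: int = 50,
                seed: int = 0) -> np.ndarray:
    """Deterministic DDIM (eta = 0) sampling of a target-view latent."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(source_latent.shape)  # start from pure Gaussian noise
    # Evenly spaced timesteps from T - 1 down to 0
    timesteps = np.linspace(len(alpha_bars) - 1, 0, num_sample_steps).round().astype(int)
    for i, t in enumerate(timesteps):
        ab_t = alpha_bars[t]
        ab_prev = alpha_bars[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        eps = denoiser(x, int(t), source_latent, pose_vec)
        # Estimate the clean latent, then step to the previous noise level
        x0_pred = (x - np.sqrt(1.0 - ab_t) * eps) / np.sqrt(ab_t)
        x = np.sqrt(ab_prev) * x0_pred + np.sqrt(1.0 - ab_prev) * eps
    return x  # a real pipeline would decode this latent with the autoencoder
```

In this sketch the denoiser sees the source latent and the pose vector at every step; how the actual model injects them (for example, by channel concatenation or cross-attention) is not described on this page. A quick sanity check is an "oracle" denoiser that returns the exact noise relative to a known target, in which case the sampler recovers that target.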

Paper

Please see our paper for additional details on the project.

Webpage created by Ethan Chun and ChatGPT :)